The Data Science Distinguished Lecture Series of Cornell’s Center for Data Science for Enterprise and Society invites top-tier faculty and industry leaders from around the world who are making groundbreaking contributions in data science. Invitees are selected by an advisory board to the Center. The lectures are cross-campus events: each seminar is co-hosted with a flagship lecture series at Cornell, working cooperatively with the Bowers College of Computing and Information Science (including the departments of Computer Science, Information Science, and Statistics and Data Science), the Schools of Operations Research & Information Engineering and Electrical & Computer Engineering in the College of Engineering, and the Departments of Mathematics and of Economics in the College of Arts and Sciences. The mission of the Center is to provide a focal point for data science work, both methodological and application domain-driven. The audience for these lectures spans all of Cornell and, given that breadth, the talks are meant to be accessible to a wide range of graduate students and faculty working in data science.

SPRING 2023

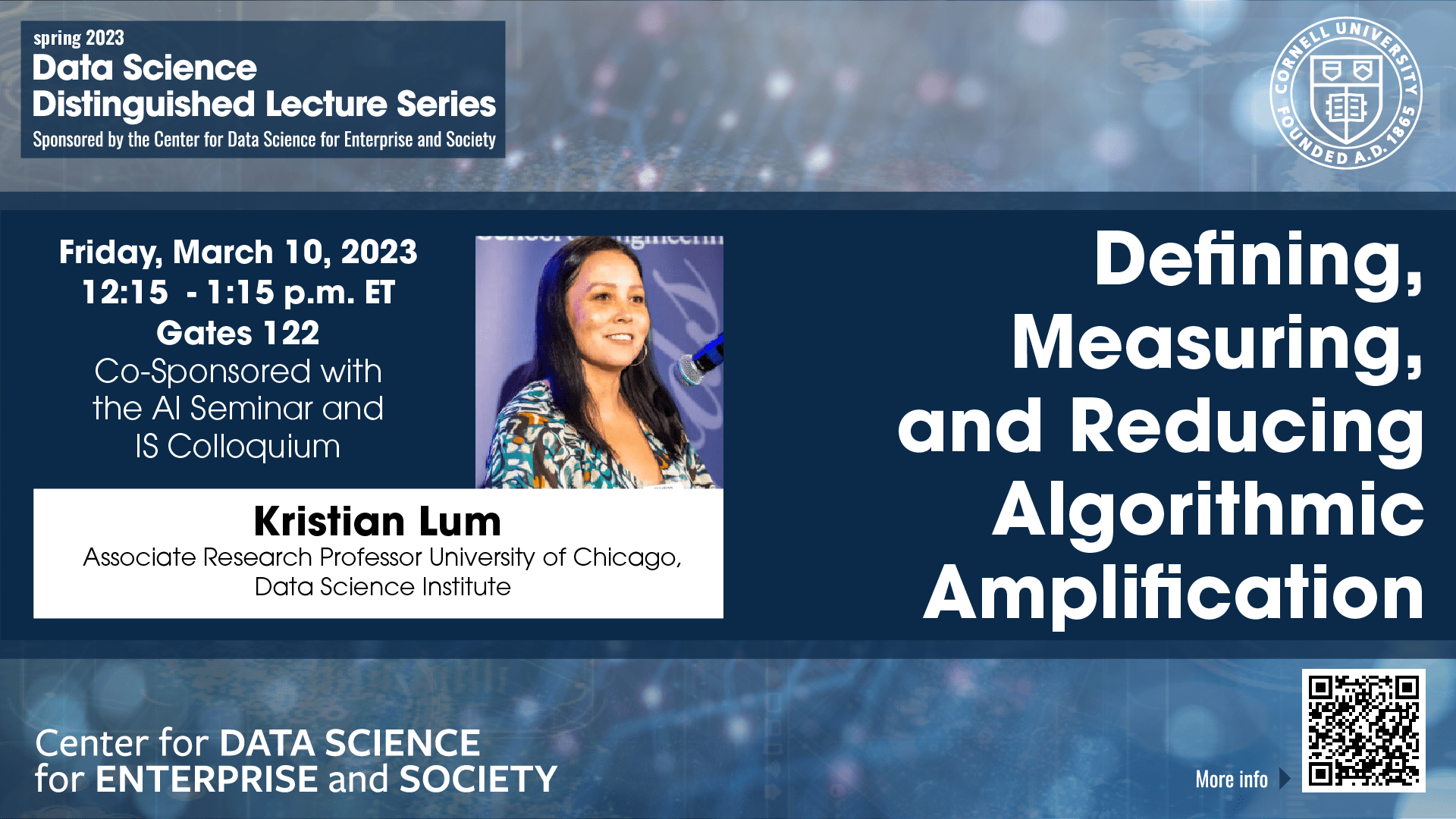

Friday, March 10

Kristian Lum

University of Chicago Data Science Institute

Title: Defining, Measuring, and Reducing Algorithmic Amplification

Co-sponsored with the AI Seminar and IS Colloquium

BIO Kristian Lum is an Associate Research Professor at the University of Chicago Data Science Institute. Previously, she was a Sr. Staff Machine Learning Researcher at Twitter, where she led research as part of the Machine Learning Ethics, Transparency, and Accountability (META) team. She is a founding member of the ACM Conference on Fairness, Accountability, and Transparency and has served in various leadership roles since its inception, growing this community of scholars and practitioners who care about the responsible use of machine learning systems. She is a recent recipient of the COPSS Emerging Leaders Award and an NSF Kavli Fellow. Her research looks into the (un)fairness of predictive models, with particular attention to those used in a criminal justice setting.

ABSTRACT As people consume more content delivered by recommender systems, it has become increasingly important to understand how content is amplified by these recommendations. Much of the dialogue around algorithmic amplification implies that the algorithm is a single machine learning model acting on a neutrally defined, immutable corpus of content to be recommended. However, there are several other components of the system that are not traditionally considered part of the algorithm that influence what ends up on a user’s content feed. In this talk, I will enumerate some of these components that influence algorithmic amplification and discuss how these components can contribute to amplification and simultaneously confound its measurement. I will then discuss several proposals for mitigating unwanted amplification, even when it is difficult to measure precisely.

ABSTRACT One of the most ancient sensory functions, vision emerged in prehistoric animals more than 540 million years ago. Since then animals, empowered first by the ability to perceive the world, and then to move around and change the world, developed more and more sophisticated intelligence systems, culminating in human intelligence. Throughout this process, visual intelligence has been a cornerstone of animal intelligence. Enabling machines to see is hence a critical step toward building intelligent machines. In this talk, I will explore a series of projects with my students and collaborators, all aiming to develop intelligent visual machines using machine learning and deep learning methods. I begin by explaining how neuroscience and cognitive science inspired the development of algorithms that enabled computers to see what humans see. Then I discuss intriguing limitations of human visual attention and how we can develop computer algorithms and applications to help, in effect allowing computers to see what humans don’t see. Yet this leads to important social and ethical considerations about what we do not want to see or do not want to be seen, inspiring work on privacy computing in computer vision, as well as the importance of addressing data bias in vision algorithms. Finally, I address the tremendous potential and opportunity to develop smart cameras and robots that help people see or do what we want machines’ help seeing or doing, shifting the narrative from AI’s potential to replace people to AI’s opportunity to help people. We present our work in ambient intelligence in healthcare as well as household robots as examples of AI’s potential to augment human capabilities. Last but not least, the cumulative observations from developing AI from a human-centered perspective have led to the establishment of Stanford’s Institute for Human-centered AI (HAI). I will showcase a small sample of interdisciplinary projects supported by HAI.

BIO Dr. Fei-Fei Li is the inaugural Sequoia Professor in the Computer Science Department at Stanford University and Co-Director of Stanford’s Human-Centered AI Institute. She served as the Director of Stanford’s AI Lab from 2013 to 2018. From January 2017 to September 2018, she was Vice President at Google and served as Chief Scientist of AI/ML at Google Cloud. Dr. Fei-Fei Li obtained her B.A. degree in physics from Princeton in 1999 with High Honors and her Ph.D. degree in electrical engineering from California Institute of Technology (Caltech) in 2005.

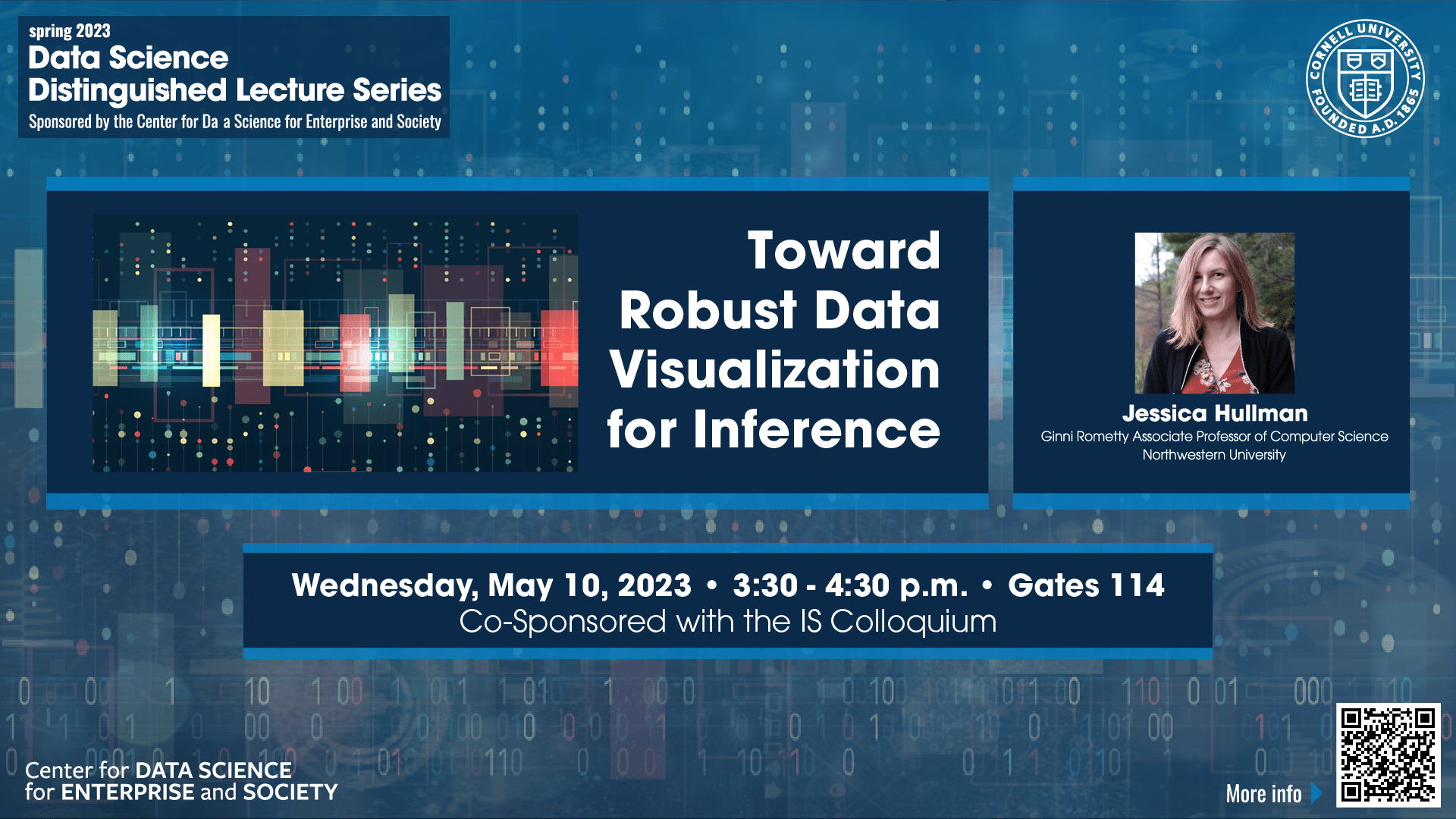

Wednesday, May 10 – 3:30 – 4:30 pm, Gates 114

Jessica Hullman

Northwestern University

Title: Toward Robust Data Visualization for Inference

Co-sponsored with the IS Colloquium

ABSTRACT Research and development in computer science and statistics have produced increasingly sophisticated software interfaces for interactive visual data analysis, and data visualizations have become ubiquitous in communicative contexts like news and scientific publishing. However, despite these successes, our understanding of how to design robust visualizations for data-driven inference remains limited. For example, designing visualizations to maximize perceptual accuracy and users’ reported satisfaction can lead people to adopt visualizations that promote overconfident interpretations. Design philosophies that emphasize data exploration and hypothesis generation over other phases of analysis can encourage pattern-finding over sensitivity analysis and quantification of uncertainty. I will motivate alternative objectives for measuring the value of a visualization, and describe design approaches that better satisfy these objectives. I will discuss how the concept of a model check can help bridge traditionally exploratory and confirmatory activities, and suggest new directions for software and empirical research.

BIO Dr. Jessica Hullman is the Ginni Rometty Associate Professor of Computer Science at Northwestern University. Her research addresses challenges that arise when people draw inductive inferences from data interfaces. Hullman’s work has contributed visualization techniques, applications, and evaluative frameworks for improving data-driven inference in applications like visual data analysis, data communication, privacy budget setting, and responsive design. Her current interests include theoretical frameworks for formalizing and evaluating the value of a better interface and elicitation of domain knowledge for data analysis. Hullman’s work has received best paper awards at top visualization and HCI venues. She is the recipient of a Microsoft Faculty Fellowship (2019) and NSF CAREER, Medium, and Small awards as PI, among others.